Welcome to Explainable AI!

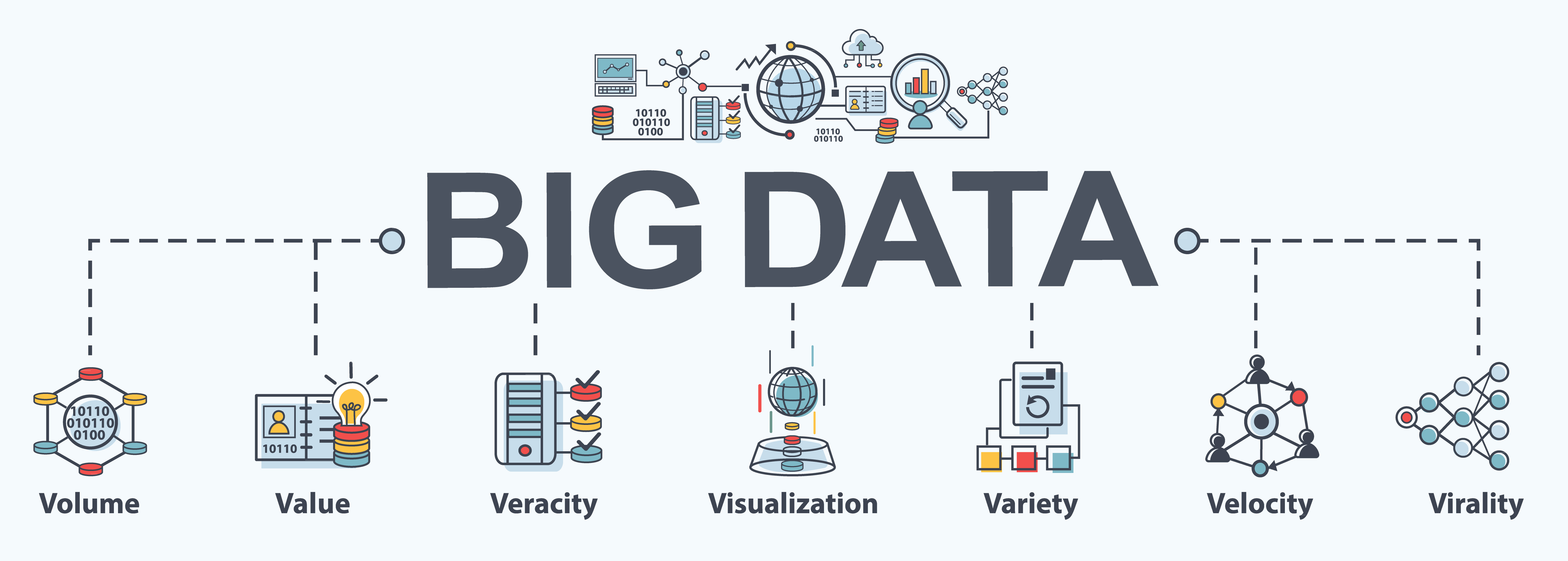

Image Source: Big Data

Over the past decade, many machine learning algorithms have been developed, each with distinct strengths and areas of application. While most traditional models have shown outstanding potential for pattern recognition on structured data, their computing power and learning ability are not sufficient for large, complex input datasets. To address this drawback, deep learning models were introduced, and they now have a proven record of impressive learning performance on large-scale data such as audio, images, and even video.

While the predictive power of these algorithms is not to be neglected, deep learning models also come with a major problem that has long troubled their users: their “un-explainability”.

To capture complex patterns within datasets, deep learning models are by nature very intricate in their architectures. This, however, makes it extremely difficult, and most of the time nearly impossible, for users to manually track or inspect a model’s learning process. Although a strong record of model accuracy is beneficial in prediction tasks, access to this training procedure is equally integral to understanding how a projected outcome is made. In the context of this project, being able to tell why a model forecast a stock trend to behave in a specific way is a crucial reference for users making investment decisions. Regardless of the discipline of application, a common concern users have raised about deep learning algorithms is the difficulty of trusting model outcomes. Because of this lack of interpretability, many researchers in fact choose to sacrifice model accuracy in exchange for more trust and certainty in the results.

This project is structured as an attempt to address this problem. We aim to build trust into deep learning systems by introducing more explainability into machine learning. Specifically, we chose one of the most complicated areas of application for both prediction and explanation: finance. This research works towards introducing interpretability into stock market modeling, to give users more trustworthy insights and references when making investment decisions. In particular, we investigate the key indicator in stock investment, the stock price. Additionally, we base the project on a diversified stock index, NIFTY 100, in the hope of gaining a more systematic and holistic view of the financial market. By inspecting a deep learning model’s predictions of stock price behavior, i.e., whether a stock price increases or decreases over the daily trading period, we aim to provide an explainable view into such financial decisions made by computer algorithms.
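To make the prediction target concrete, the sketch below shows one possible way to turn a day of trading into an up/down label. It is only an illustration under stated assumptions: "increase" is taken to mean the closing price is higher than the opening price, a flat day is counted as "not up", and the `DailyBar` structure, the `label_direction` helper, and the prices are all hypothetical rather than taken from the project.

```python
from dataclasses import dataclass


@dataclass
class DailyBar:
    """One trading day for one stock (hypothetical structure for illustration)."""
    date: str
    open: float
    close: float


def label_direction(bar: DailyBar) -> int:
    """Return 1 if the price rose over the trading day, else 0.

    Assumption: "rose" means close > open; a flat day is labeled 0.
    """
    return 1 if bar.close > bar.open else 0


# Made-up prices, purely for illustration (not real NIFTY 100 data)
bars = [
    DailyBar("day-1", open=100.0, close=101.5),  # price rose
    DailyBar("day-2", open=101.5, close=100.2),  # price fell
    DailyBar("day-3", open=100.2, close=100.2),  # flat day
]

labels = [label_direction(b) for b in bars]
print(labels)
```

A binary label of this kind would be what a classifier predicts and what an explanation method would then try to account for; other definitions, such as comparing consecutive closing prices, are equally plausible.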